Mozilla Hubs vs the Object Network

Techie article! Scoring Mozilla's Hubs against my architectural vision

Here I’m going to compare Mozilla Hubs against The Inversion - my architectural vision behind the Object Network - so please do read that first. I don’t go into much detail here about my vision, its justification or why it’s better; this is just about how there’s nothing out there that does what *I* want.

Mozilla Hubs

Mozilla Hubs is a Web-based virtual world technology based around glTF. It’s a good representative of its class, which also includes Third Room, Croquet, Decentraland, and others. The worlds it enables are split into three types of content: glTF for scenes, glb for avatars, and 2D media assets that you can drop into a space. It has custom code for syncing scenes and sending updates. Like all such approaches, there’s a full page reload when you jump regions or sites.

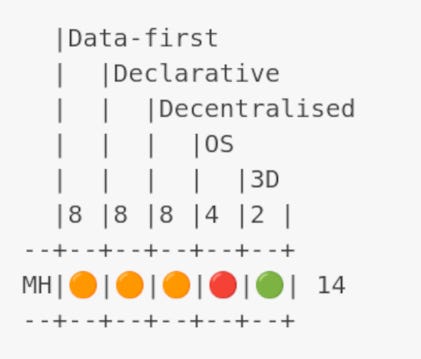

Hubs inherits the Web’s Decentralised amber score and of course gets full 3D points.

As for Data-first and Declarative, Hubs inherits the Inversion inherent in all virtual worlds: in a virtual world you see the states of all world objects around you first, and those world objects can be internally animated, just as you do, in 2D, with the cells of a spreadsheet. This is the visible aspect of an internal scenegraph of one sort or another, often deserialised from glTF.

But the Inversion of all virtual worlds is only implicit and is still locked away in the Imperative code that implements the scenegraph, etc. And as Hubs illustrates, scenes, avatars and more transient property are all handled in different ways.

| | Deconstructed | Declarative | Decentralised | OS | 3D | Total |
|---|---|---|---|---|---|---|
| | 8 | 8 | 8 | 4 | 2 | |
| MH | 🟠 | 🟠 | 🟠 | 🔴 | 🟢 | 14 |

Differences to the Object Network

In my recent article on the Meta-Web, I showed how to fully bring out the implicit Inversion of virtual worlds, through links to and between all world objects.

As I mentioned at the end of that article, an interesting start to bringing Hubs towards the Object Network would be to simply use SOLID Pods on the servers and for all the scene data. You’d load a render script once - no need to reload everything when you jump regions or sites by URL.

Now, instead of three ways of implementing scene elements (glTF, glb, etc.), you would only have JSON objects linked together to form an explicit scenegraph. And this scenegraph is no longer internal to an instance: it’s a single, global, distributed scenegraph; you could explore a global Web of Pod data owned by different people all over the world.
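To make the idea of an explicit, linked scenegraph a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the property names (`is`, `position`, `contains`), the `alice.example` and `bob.example` URLs, and the in-memory `PODS` dictionary that stands in for Pods on different servers. A real version would fetch each URL over HTTP from its owner's Pod.

```python
import json

# Hypothetical stand-in for Pods owned by different people: URL -> JSON text.
PODS = {
    "https://alice.example/room": json.dumps({
        "is": "room",
        "contains": ["https://alice.example/table",
                     "https://bob.example/avatar"],
    }),
    "https://alice.example/table": json.dumps({
        "is": "table", "position": [1.0, 0.0, 2.0], "contains": [],
    }),
    "https://bob.example/avatar": json.dumps({
        "is": "avatar", "position": [0.0, 0.0, 0.5],
        "contains": ["https://bob.example/hat"],
    }),
    "https://bob.example/hat": json.dumps({
        "is": "hat", "position": [0.0, 1.8, 0.0], "contains": [],
    }),
}


def fetch(url):
    """Return the JSON object stored at url (here: a dictionary lookup)."""
    return json.loads(PODS[url])


def walk(url, fetch=fetch, depth=0, seen=None):
    """Yield (depth, url, object) for every object reachable from url.

    Links are plain URLs, so the walk crosses from one owner's data to
    another's without caring where each object lives. The seen set stops
    the walk from looping if objects link back to each other.
    """
    seen = set() if seen is None else seen
    if url in seen:
        return
    seen.add(url)
    obj = fetch(url)
    yield depth, url, obj
    for child_url in obj.get("contains", []):
        yield from walk(child_url, fetch, depth + 1, seen)


if __name__ == "__main__":
    for depth, url, obj in walk("https://alice.example/room"):
        print("  " * depth + f"{obj['is']} <{url}>")
```

The point of the sketch is that the scenegraph is just the set of objects you can reach by following links: Alice's room contains Bob's avatar only by pointing at its URL, so the renderer never needs a separate mechanism for scenes, avatars and dropped-in items.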

You could use SOLID’s notification protocol for scene object updates and to drive animations and interactions.
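As a rough sketch of how update notifications might drive a renderer, the Python below keeps a local cache of scene objects and re-fetches one whenever a notification names its URL. The message shape (`type` and `object` fields), the `SceneCache` class and the in-memory `server` dictionary are all hypothetical; this is not the wire format of the actual notification protocol, and a real client would receive messages over a subscription channel rather than a direct function call.

```python
import copy


class SceneCache:
    """Local copies of the scene objects being rendered, keyed by URL."""

    def __init__(self, fetch):
        self.fetch = fetch      # callable: URL -> dict
        self.objects = {}       # URL -> last fetched state
        self.listeners = []     # callbacks taking (url, old, new)

    def load(self, url):
        self.objects[url] = self.fetch(url)
        return self.objects[url]

    def on_change(self, listener):
        self.listeners.append(listener)

    def handle_notification(self, message):
        """Re-fetch the object a notification points at.

        Returns True if the cached state changed and listeners were told.
        """
        url = message.get("object")
        if message.get("type") != "Update" or url not in self.objects:
            return False        # not an update, or not in our scene
        old, new = self.objects[url], self.fetch(url)
        if new == old:
            return False
        self.objects[url] = new
        for listener in self.listeners:
            listener(url, old, new)
        return True


def demo():
    """Move an avatar on the 'server' and let a notification update the cache."""
    avatar = "https://bob.example/avatar"
    server = {avatar: {"is": "avatar", "position": [0.0, 0.0, 0.5]}}
    cache = SceneCache(lambda url: copy.deepcopy(server[url]))
    cache.load(avatar)

    moves = []
    cache.on_change(
        lambda url, old, new: moves.append((old["position"], new["position"])))

    server[avatar]["position"] = [1.0, 0.0, 0.5]   # the owner moves the avatar
    cache.handle_notification({"type": "Update", "object": avatar})
    return moves


if __name__ == "__main__":
    print(demo())
```

A listener like the one in `demo` is where an animation could hook in, for example by interpolating between the old and new `position` instead of jumping straight to the new one.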
